Databricks Lakehouse Platform

Taking data analysis to a new level with Databricks

See how you can maximize flexibility, cost-effectiveness and scalability

What is Databricks?

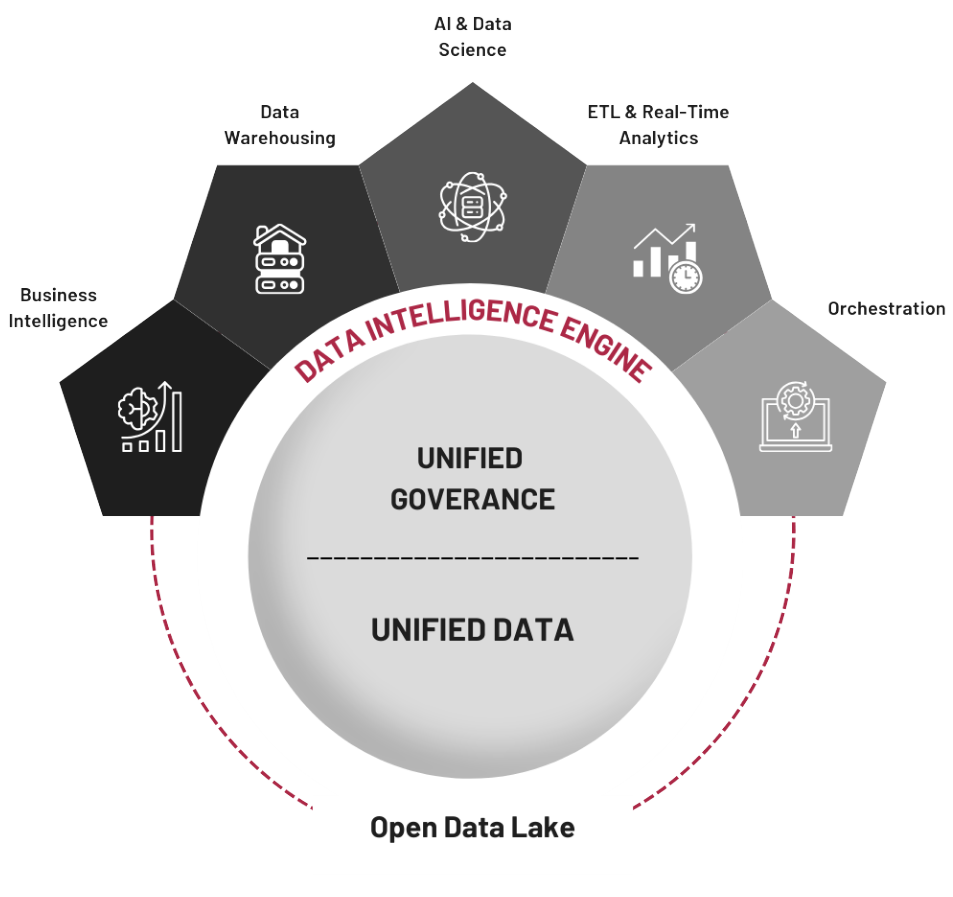

Databricks is a cloud platform designed for working with large data sets, analytics and machine learning. It is built on the integration of the high-performance Apache Spark data processing engine with a data lake, a technology for storing and managing structured and unstructured data.

Compared with classic data warehouses, it is far more flexible and scalable. Databricks combines the best of data warehouses and data lakes while eliminating the warehouses' biggest limitations in scalability and data storage, creating a lakehouse. Built on open standards and open source technologies, the lakehouse simplifies data management and eliminates the silos that have hindered data and AI work.

What can we do for you?

Consultation and technical advice

Business requirements analysis – identifying the company's goals and challenges to align Databricks technology with its needs.

Environment assessment – auditing the data infrastructure to understand how best to deploy Databricks in the customer's current ecosystem.

Architecture design – designing the optimal Databricks-based architecture for a cloud or hybrid environment.

Implementation and configuration

Integration with other analytical tools, data management systems (ETL), data warehouses and data sources.

Data flow management – development and implementation of data pipelines to enable efficient processing of large data sets in real time.

Real-time data processing – implementation of solutions for analyzing data streams (streaming) and processing batch data.
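To make the difference between batch and stream processing concrete, here is a minimal sketch in plain Python. It does not use the Databricks or Apache Spark APIs; the event shape, the function names and the `batch_size` parameter are all assumptions made for illustration. Batch mode aggregates a complete data set in one pass, while streaming mode consumes events in small micro-batches and emits updated running totals as data arrives.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

# Hypothetical event shape: (sensor_id, reading).
Event = Tuple[str, float]


def batch_totals(events: List[Event]) -> Dict[str, float]:
    """Batch mode: the full data set is available, so aggregate it in one pass."""
    totals: Dict[str, float] = defaultdict(float)
    for key, value in events:
        totals[key] += value
    return dict(totals)


def streaming_totals(
    events: Iterable[Event], batch_size: int
) -> Iterator[Dict[str, float]]:
    """Streaming mode: emit a snapshot of the running totals after each micro-batch."""
    totals: Dict[str, float] = defaultdict(float)
    pending = 0
    for key, value in events:
        totals[key] += value
        pending += 1
        if pending == batch_size:
            yield dict(totals)
            pending = 0
    if pending:
        # Flush the final, partially filled micro-batch.
        yield dict(totals)


events = [("a", 1.0), ("b", 2.0), ("a", 3.0), ("b", 4.0), ("a", 5.0)]
snapshots = list(streaming_totals(iter(events), batch_size=2))
```

Once the stream is exhausted, the last snapshot matches the batch result; the streaming version simply makes intermediate results available earlier.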

Data management and data modeling

Data transformation and enrichment – optimizing and modeling data for better usability, including with machine learning and AI techniques.

Integration with BI (Business Intelligence) tools, such as Power BI, enabling the creation of reports and dashboards.

Optimization and performance management

Optimizing Apache Spark clusters – adjusting the configuration and management of Spark clusters to achieve the best performance and cost efficiency.

Resource monitoring and scaling – adjusting cloud resources according to workloads to reduce costs and improve efficiency.
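The idea of adjusting resources to the workload can be sketched as a simple sizing rule. This is an illustrative assumption, not the autoscaling algorithm Databricks itself uses: the function name `target_workers` and all of its parameters are hypothetical. The rule requests enough workers to cover the pending work, clamped between a configured minimum and maximum.

```python
import math


def target_workers(
    pending_tasks: int, tasks_per_worker: int, min_workers: int, max_workers: int
) -> int:
    """Return a worker count sized to the pending work, within the allowed range."""
    if tasks_per_worker <= 0:
        raise ValueError("tasks_per_worker must be positive")
    if min_workers > max_workers:
        raise ValueError("min_workers must not exceed max_workers")
    # Workers needed to cover the backlog, rounded up.
    needed = math.ceil(pending_tasks / tasks_per_worker)
    # Never drop below the minimum or grow past the maximum.
    return max(min_workers, min(max_workers, needed))
```

For example, with a range of 2 to 8 workers and 10 tasks per worker, an idle cluster stays at 2 workers, 45 pending tasks call for 5, and a backlog of 500 tasks is capped at 8.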

Benefits of Databricks

Scalability and efficiency

Databricks processes very large volumes of data in the cloud, so companies can scale their operations without investing in costly infrastructure of their own.

Integration with cloud tools

Databricks runs on the leading cloud platforms, Azure, AWS and Google Cloud, which makes it significantly easier to manage data and computing resources.

Advanced security features

The platform provides advanced security features such as access control and data encryption, along with support for regulatory compliance, which help protect corporate data and keep it confidential.

Cost optimization

With flexible management of computing resources, companies can optimize operating costs by paying only for the resources actually used.
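A rough way to see the effect of paying only for what is used is to compare it with a cluster that is provisioned for peak load around the clock. The sketch below is a simplified model under stated assumptions: the hourly rate and the workload profile are invented numbers, and real Databricks pricing has more components than a single per-worker rate.

```python
from typing import List


def fixed_cost(provisioned_workers: int, hourly_rate: float, hours: int) -> float:
    """Cost of keeping a fixed number of workers running for the whole period."""
    return provisioned_workers * hourly_rate * hours


def usage_cost(workers_per_hour: List[int], hourly_rate: float) -> float:
    """Cost when only the workers actually running in each hour are billed."""
    return sum(workers_per_hour) * hourly_rate


# Hypothetical six-hour workload: quiet, a two-hour peak, then quiet again.
workload = [2, 2, 8, 8, 2, 2]
rate = 1.5  # assumed price per worker-hour, in arbitrary currency units

peak_provisioned = fixed_cost(max(workload), rate, len(workload))
pay_per_use = usage_cost(workload, rate)
```

In this made-up profile, provisioning for the peak costs 72.0 units, while billing by actual usage costs 36.0; the gap grows as the workload becomes more uneven.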

Fast data processing and process automation

Databricks is built on technologies such as Apache Spark, which enable fast processing and analysis of even very large data sets. In addition, Databricks supports task automation through scheduled scripts and data pipelines, which increases operational efficiency and reduces the risk of human error.

Team collaboration

Databricks offers interactive notebooks that enable teams to collaborate in real time, speeding up decision-making and making it easier to share analysis results.